Each year the Hamlyn Centre for Robotic Surgery puts together the pre-eminent deep technical conference looking at the future of surgical robotics. Our digital surgery team at Cambridge Consultants were honoured to play a role yet again at the Hamlyn Symposium on Medical Robotics 2023. We contributed to panel discussions, judged the surgical robotics challenge, and had the opportunity to deliver some of our insights and analysis on the pivotal role data will play in the realisation of digital surgery.

I am always amazed by the energy and enthusiasm the team draws from Hamlyn as they meet new colleagues and connect with old friends, all with the common goal of pushing the limits of surgery forward together through advanced technology. They usually leave with a renewed fervour to go and solve the biggest challenges standing in the way of our vision to improve surgical care. They certainly return with a host of new ideas and topics to debate with the rest of our team at Cambridge Consultants about what lies ahead of us.

When I met with the team this year, it was clear they had gained a multitude of insights over the few days in London, but two topics stood out. They capture some broad trends in the digital surgery market as well as some of the deep technical opportunities in front of us. Here’s our take on them.

The lens of Human-Machine Understanding and Human Factors

By Michelle Lim and Tim Phillips

The theme this year was Immersive Tech: The Future of Medicine, but there was less emphasis on this than we expected. Does this reflect a gap between expectation and reality? Or is it a realisation that there are other technologies needed to drive the insights making immersive technologies clinically relevant and impactful? Enter AI.

There is a lot of optimism about the role AI could play in the future of healthcare and of surgical robotics companies. Regulatory caution aside, the ability of AI to augment human decision making and accelerate surgeon training has shown tremendous promise.

As we look to the future, features like error detection, tool tracking, anatomical identification and expertise classification could remove the fear associated with those two pervasive letters. They could also drive the realisation that these algorithms can optimise processes, improve decision making and reduce complications, which overall could improve surgical care.

Looking beyond the operating room to what has already been done in diagnostic imaging and applied at the point of care, there are plenty of unmet needs. There are also value-add use cases where AI could automate simple existing processes, reducing the negative impact of the dwindling healthcare workforce globally.

And to that point, there was a discernible shift in the tone of this year’s presentations and workshops, away from solely deep technical innovation and towards how those advances align with the needs of the various stakeholders. In short, a renewed focus on recapturing the value proposition of surgical robots to improve patient care.

One could tell a critical eye is being placed on whether these innovations are truly improving care and meeting the needs of the greater healthcare system or whether, on the other hand, they are academic pursuits still focused only on precision, accuracy and reproducibility at tremendous expense. And while the definition of digital surgery and its associated use cases were still cloudy, the need for data and insight platforms to help drive decision making in the robotic system certainly was not.

The promise of autonomous surgical systems seems closer now than the science fiction of years past, but the path to get there in the operating room may actually begin outside it. Fascinating product development out of SDU in Denmark showed a robotic system arising from the need to diagnose rheumatoid arthritis sooner, without relying on scarce human operators to drive the diagnostic platform.

Combining ultrasound with a robotic arm and an easy-to-use interface created an entirely new point of care diagnostic setting, where data was then tracked to a citizen’s social security card. This contrasts with the often-cumbersome EHRs that change from one location to the next. Will we see more use cases like this, with robotics serving a present medical need outside surgery? We hope so! In any event, these are exciting times with tremendous promise and a whole host of difficult challenges to overcome.

Going deep down the mechatronics rabbit hole

By Alex Joseph

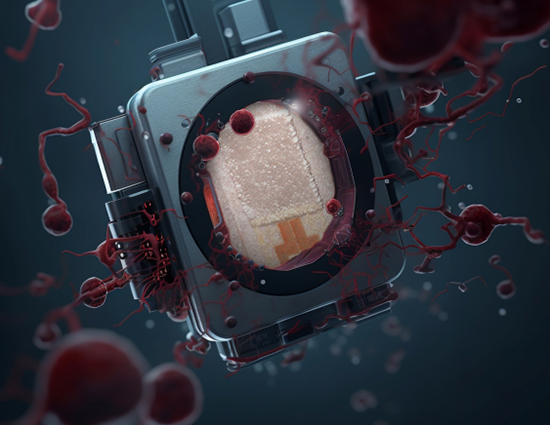

Flexible Surgical Robotics (FSR) has witnessed remarkable advancements in recent years. We’re seeing the potential to revolutionise interventional care by allowing surgeons to navigate to anatomy that was previously challenging or impossible to reach.

Additionally, advancements in machine learning and imaging technologies are driving increased research into the autonomy of FSRs. However, there are still some major challenges to overcome before we see these systems and concepts translate to industry. They include reliable and precise control, non-intuitive steering, shape sensing, and a lack of both clinical training data and regulatory pathways.

In his introductory talk at the ‘Autonomous flexible surgical robots: where we are and where we are going’ workshop, Dr Di Wu compared autonomy in surgical robotics with autonomy in self-driving cars. He stated that the workshop would mostly focus on level 2 autonomy, known as ‘partial’ or ‘task autonomy’.

This article “Medical robotics—Regulatory, ethical, and legal considerations for increasing levels of autonomy” illustrates the different levels of autonomy as mapped to robotic surgery.

During one of the workshop discussions the panel was asked: ‘What are the biggest technical gaps to achieving autonomous FSRs in clinical use?’ Interestingly, all the panellists gave the same answer: sensing.

While it’s undeniable that sensing is an important aspect of an autonomous system, we believe that it’s the development of contextual understanding that’s required to advance task autonomy. Sensing is one of the puzzle pieces here, but sensing data by itself will not result in a step-change in autonomy.

Control and navigation algorithms, clinical understanding and computation are all needed to create a system that can achieve increased levels of autonomy. Take, for example, the challenge of controlling concentric tube robots. Due to their kinematic design, concentric tube robots can’t always move directly from one point in their workspace to another without retracting the tubes, rotating, and re-extending.

Dr Christos Bergeles presented his research that introduced a control mechanism where the controller is fed information several steps ahead. This means it can use information on where the end effector needs to go next to configure the robot in the optimal position to move through the trajectory. You can explore Dr Christos Bergeles’s publications here.
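To make the idea of look-ahead concrete, here is a minimal toy sketch in Python. It is not Dr Bergeles’s controller: the one-dimensional "configurations", the `transition_cost` function and the candidate values are all hypothetical, chosen only to show how knowing the next waypoint can change which configuration is best now.

```python
def transition_cost(a, b):
    """Hypothetical cost of moving between two configurations (assumed: distance)."""
    return abs(a - b)


def best_cost(current, upcoming):
    """Cheapest total cost of visiting the upcoming waypoints in order."""
    if not upcoming:
        return 0.0
    return min(
        transition_cost(current, c) + best_cost(c, upcoming[1:])
        for c in upcoming[0]
    )


def plan(start, candidates, horizon):
    """Pick one configuration per waypoint, looking `horizon` waypoints ahead.

    candidates[i] lists the configurations that all reach waypoint i.
    horizon=1 is a greedy controller that only sees the current waypoint.
    """
    current, path, total = start, [], 0.0
    for i in range(len(candidates)):
        window = candidates[i + 1 : i + horizon]  # waypoints visible beyond this one
        choice = min(
            candidates[i],
            key=lambda c: transition_cost(current, c) + best_cost(c, window),
        )
        total += transition_cost(current, choice)
        path.append(choice)
        current = choice
    return path, total


# Illustrative numbers only: three waypoints, two candidate configurations each.
candidates = [[1.0, -1.2], [5.0, -1.5], [5.5, -2.0]]
greedy_path, greedy_total = plan(0.0, candidates, horizon=1)
lookahead_path, lookahead_total = plan(0.0, candidates, horizon=2)
print(greedy_path, greedy_total)
print(lookahead_path, lookahead_total)
```

With these made-up numbers the greedy controller takes the cheapest first move and then pays for a large reconfiguration, while the look-ahead controller accepts a slightly costlier first move and ends up with a lower total.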

This control method uses the null space of the robot to optimise trajectory planning. The null space is the subspace of the robot’s configuration space in which changes to certain control variables, for example link position, have no effect on the primary task being performed. It represents additional degrees of freedom that can be manipulated independently without affecting the desired motion or task execution.

To understand the null space concept, consider a robot arm navigating to a target position. The robot’s configuration space consists of all possible joint angles and positions that the robot can adopt. The null space encompasses the remaining degrees of freedom in the configuration space that are not directly involved in the primary task. These degrees of freedom can be exploited to optimise secondary objectives or improve the robot’s performance. For example, in the case of a robot arm, the null space can be used to maximise stiffness in a particular direction or maintain a certain pose.
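As a rough illustration of the robot arm example, the sketch below uses a planar arm with three revolute joints, which has one more joint than it needs to position its tip in two dimensions. The arm geometry, joint angles and function names are assumptions made for this example and are not taken from any of the systems presented. For a 2x3 Jacobian, the null space is spanned by the cross product of its two rows, so no linear algebra library is needed.

```python
import math

LENGTHS = (1.0, 1.0, 1.0)  # assumed link lengths, arbitrary units


def forward_kinematics(angles, lengths=LENGTHS):
    """Tip position (x, y) of a planar arm with revolute joints."""
    x = y = total = 0.0
    for angle, length in zip(angles, lengths):
        total += angle
        x += length * math.cos(total)
        y += length * math.sin(total)
    return x, y


def jacobian(angles, lengths=LENGTHS):
    """Rows of the 2x3 Jacobian: how each joint velocity moves the tip."""
    cumulative, total = [], 0.0
    for angle in angles:
        total += angle
        cumulative.append(total)
    n = len(angles)
    row_x = [-sum(lengths[k] * math.sin(cumulative[k]) for k in range(j, n)) for j in range(n)]
    row_y = [sum(lengths[k] * math.cos(cumulative[k]) for k in range(j, n)) for j in range(n)]
    return row_x, row_y


def null_space_direction(angles):
    """Unit joint-space direction that, to first order, leaves the tip still.

    Assumes the arm is away from a singularity (e.g. not fully stretched out),
    otherwise the cross product vanishes and the division below fails.
    """
    a, b = jacobian(angles)
    n = (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
    norm = math.sqrt(sum(c * c for c in n))
    return tuple(c / norm for c in n)


angles = (0.3, 0.5, -0.4)  # arbitrary example pose, in radians
direction = null_space_direction(angles)
step = 1e-3

# Nudge all joints along the null-space direction: the pose changes, the tip barely moves.
nudged = tuple(q + step * d for q, d in zip(angles, direction))
tip_shift = math.dist(forward_kinematics(angles), forward_kinematics(nudged))

# For comparison, nudge only the first joint by the same amount.
single = (angles[0] + step, angles[1], angles[2])
single_shift = math.dist(forward_kinematics(angles), forward_kinematics(single))

print(f"null-space nudge moves the tip by {tip_shift:.2e}")
print(f"single-joint nudge moves the tip by {single_shift:.2e}")
```

A secondary objective, such as stiffness in a given direction, could then be improved by stepping along `direction` while the primary task of holding the tip position is left essentially untouched.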

By taking advantage of the null space in concentric tube robots, their capabilities can be enhanced to optimise control or other physical properties such as stiffness. It provides a valuable resource for improving robot performance and flexibility without compromising the primary task execution.

An interesting area of future investigation will be incorporating clinical and task context into the optimisation functions and creating algorithms that can optimise the robot’s pose based on the system’s understanding of the task.

This could, for example, mean taking a probabilistic approach to the next end-effector position and selecting the current pose based on the likely next target position, or optimising the tube configuration to provide additional stiffness at certain points in a procedure. The combination of null-space optimisation, contextual knowledge and advanced control algorithms could have significantly more impact on the development of autonomous robotic solutions than simply having better sensors.
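One way to picture the probabilistic approach is as an expected-cost calculation. The sketch below is purely illustrative: the pose names, target names, probabilities and move costs are invented for the example, and in a real system they would have to come from a model of the procedure.

```python
def expected_cost(pose, next_target_probs, move_cost):
    """Average cost of reaching the next target from `pose`, weighted by likelihood."""
    return sum(
        prob * move_cost[(pose, target)]
        for target, prob in next_target_probs.items()
    )


def choose_pose(candidate_poses, next_target_probs, move_cost):
    """Among poses that all hold the current target, pick the cheapest on average."""
    return min(
        candidate_poses,
        key=lambda pose: expected_cost(pose, next_target_probs, move_cost),
    )


# Hypothetical costs of moving from each null-space pose to each possible next target.
move_cost = {
    ("pose_a", "target_1"): 1.0,
    ("pose_a", "target_2"): 5.0,
    ("pose_b", "target_1"): 3.0,
    ("pose_b", "target_2"): 0.5,
}
poses = ["pose_a", "pose_b"]

# Assumed task context: target_1 is the likely next step early in the procedure,
# target_2 is the likely next step later on.
early = choose_pose(poses, {"target_1": 0.8, "target_2": 0.2}, move_cost)
late = choose_pose(poses, {"target_1": 0.2, "target_2": 0.8}, move_cost)
print(early, late)
```

The same two poses hold the current target equally well, but the preferred one flips as the task context changes the likelihood of each next target.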

Medical robotics: where next?

We look forward to continuing our analysis of these topics and the multitude of others driving robot-assisted surgery forward within the industry. We want to thank the Hamlyn Centre for its continued partnership in allowing us to play an integral part in its symposium. We are already looking forward to seeing what 2024 holds.

And as always, we would love to hear your thoughts or debate these topics further. Please reach out to us to arrange a time to chat with our digital surgery team.