At CC, we’re taking physical AI from deep tech into real-world impact. Human-robot interaction (HRI) lies at the heart of that journey.

A major step change in robotics is underway. Physical AI, meaning AI that can operate in the physical world, is experiencing a surge in interest and investment that shows no signs of slowing – and robotics is one of the major application areas for it. With the potential to transform industries ranging from industrial automation and manufacturing to healthcare and domestic services, robots enabled by physical AI promise game-changing solutions for how we work and live.

As physical AI seeks its ideal form, humanoid robots are becoming a popular platform for general-purpose robotics. Our world is built for the human form, so it makes sense that humanoid robots can more easily navigate and operate within it. Naturally, this makes interaction between humans and robots inevitable across a wide range of applications, from working alongside employees in a warehouse to helping with chores at home.

For physical AI to realise its potential and be truly useful, it must do more than function – it must understand our needs. The real value lies not just in the capabilities of these systems, but in how effectively they interact with people. It’s this human-centred interaction, enabled by HRI integration, that will elevate physical AI from a tool to a trusted teammate – one that supports, assists and empowers your human team.

Our roadmap for true human-robot collaboration

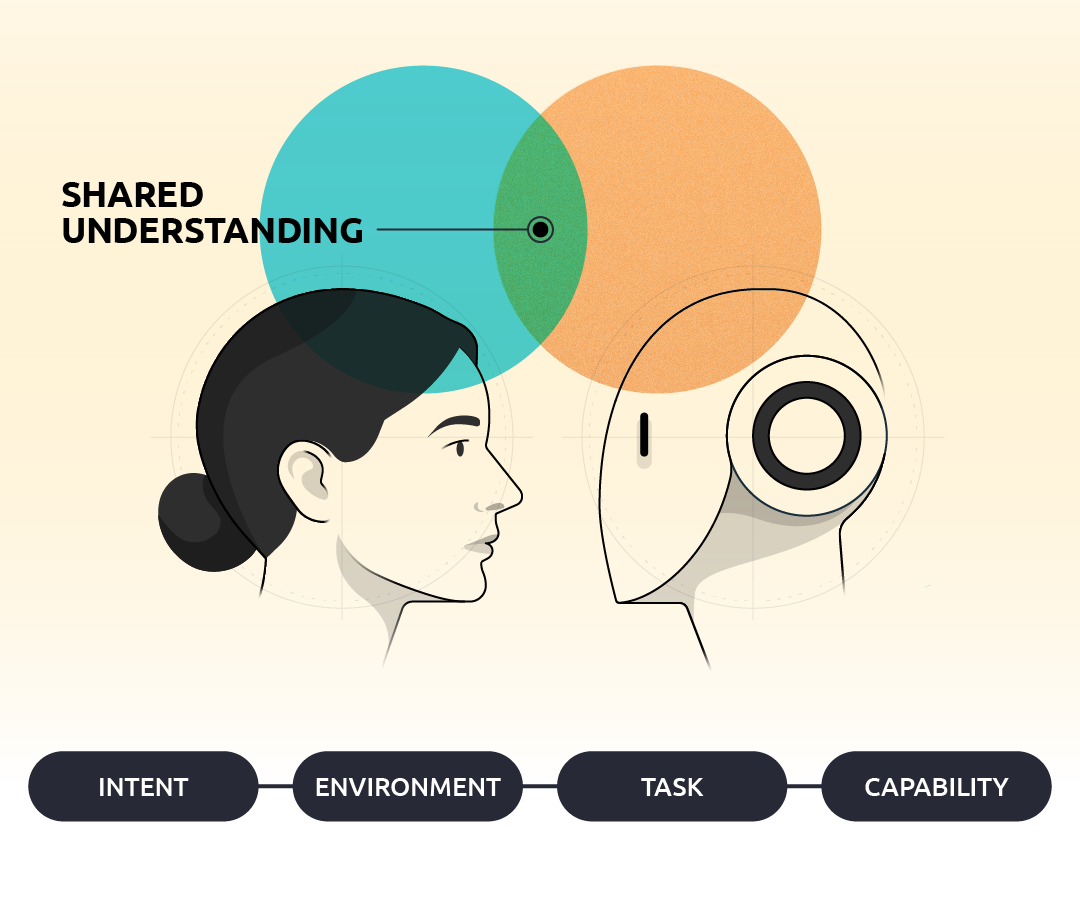

To understand what it takes for a robot to be a good teammate, we first need to look at what makes a good human teammate. Effective collaboration between people is built on a shared mental model and a common understanding of the context in which they operate. This involves a shared understanding of four key components:

- Environment: what is this space for, what objects and tools are within it, and what can we use them for?

- Task: what are we trying to achieve, and what steps are required?

- Capabilities: what is each of us able to do and where are our limits?

- Intent: what are you trying to do right now, and how does that align with what I’m doing?

Without this shared foundation, teaming is inefficient and trust is impossible. With it, seamless collaboration becomes a reality.

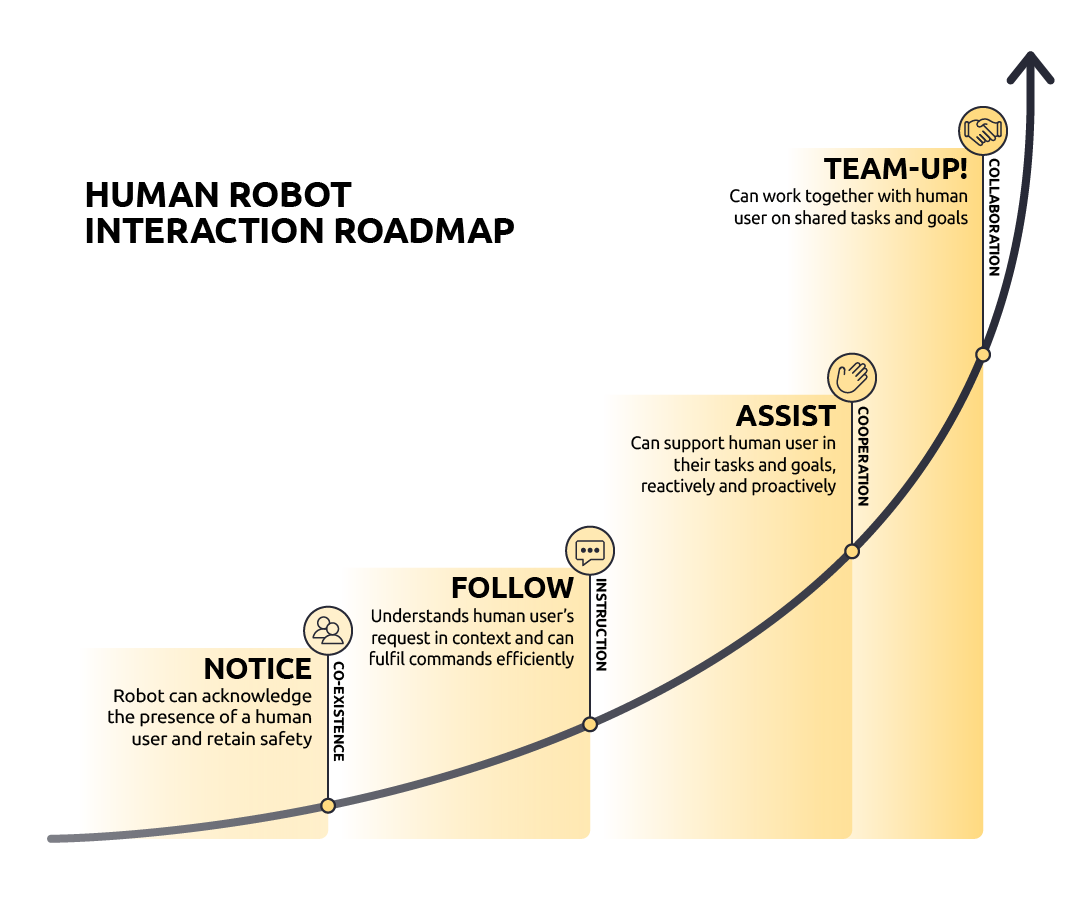

We humans build up the ability to form such a shared understanding from childhood and apply it naturally in our daily interactions with others. For robots to catch up, we need a clear roadmap for human-robot interaction. This roadmap moves through four stages of increasing sophistication:

Stage 1: Coexistence. This is the foundational step, where a robot can safely and efficiently operate alongside, but independently of, humans. For example, in a warehouse the robot must understand that humans are present and predict their likely paths to navigate safely around them without constant, inefficient stops. This is already a step beyond most current industrial systems, which often require robots to be fenced off, separate from human workers.

Stage 2: Instruction. At this level, the robot understands natural human commands in context. This goes beyond rigid graphical user interfaces or structured verbal commands to interpreting a mix of free speech, pointing, gaze and other natural gestures. This creates a shared understanding of the task and environment: a user can simply say “pick that up, put it over there” while pointing, and be fully understood.

Stage 3: Cooperation. Here, the robot and human work towards the same goal on interconnected but separate tasks. The robot can proactively assist, such as anticipating the tools a human may need for the next step in a task and bringing them without being asked.

Stage 4: Collaboration. This is where humans and robots work together on a shared task with a shared understanding of goals and processes. This could be assembling a product together in a factory or stacking a dishwasher together at home.

While these four stages build on each other in sophistication, the ultimate goal isn’t simply to reach ‘collaboration’ with every robot. Instead, this roadmap represents a menu of capabilities. The real mastery of HRI lies in having the full spectrum of interaction modes available, allowing us to select the appropriate level of interaction for a given task, user and environment. A warehouse robot moving boxes may only need to ‘notice’ human workers to be safe and efficient, while an assistant in the home might need to ‘cooperate’ on a task. The key is deploying the right capability for the right context, ensuring both optimal performance and trust.

Achieving this level of contextual relevance is what makes advanced HRI uniquely challenging, as well as valuable. It requires more than just advanced perception to understand a scene, and more than just classical control to move a robot through it. The goal of HRI is to build a shared understanding between the human and the machine, giving the system the ability to infer a person’s immediate intent from their behaviour and understand their needs in relation to the physical space and the task at hand.

This deep, contextual intelligence transforms machines from reactive, pre-programmed tools into proactive collaborators, driving new levels of safety, efficiency and industry adoption.

Human-robot interaction in action at CC

Our physical AI team are actively advancing our capability along this roadmap. Our current systems are already performing beyond the ‘follow’ stage, while ongoing R&D is focused on working towards the ‘team up’ stage.

See it in action in our demo of the ‘follow’ stage, which matches Stage 2 (Instruction) on the roadmap above. It shows natural interaction between human and robot using only the robot’s onboard camera and microphone, with no need for extra sensors on the user or in the room.

In the demo, we see the user doesn’t need to use structured commands and can instead use a natural mix of verbal and non-verbal cues. The user asks the robot to move a cup. When a second cup is introduced, the robot identifies the ambiguity and, instead of failing, asks for clarification. The user resolves the ambiguity by pointing and the robot confirms the instruction and proceeds to complete the task.
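The clarification behaviour in the demo can be illustrated with a minimal Python sketch. This is not the code running on our robot: every name here (`DetectedObject`, `PointingGesture`, `resolve_referent`) and the 15-degree pointing tolerance are assumptions chosen purely for illustration. The sketch assumes perception has already produced labelled objects with 3D positions and, optionally, a pointing ray from the user's hand.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class DetectedObject:
    name: str       # hypothetical unique id, e.g. "cup_1"
    label: str      # object class from perception, e.g. "cup"
    position: Vec3  # metres, in the robot's frame


@dataclass(frozen=True)
class PointingGesture:
    origin: Vec3     # e.g. the user's hand position
    direction: Vec3  # pointing direction, need not be normalised


@dataclass(frozen=True)
class Resolution:
    target: Optional[DetectedObject]  # set when the referent is unambiguous
    question: Optional[str]           # set when the robot should ask instead


def _angle_to(gesture: PointingGesture, point: Vec3) -> float:
    """Angle in radians between the pointing ray and the line to `point`."""
    to_obj = tuple(p - o for p, o in zip(point, gesture.origin))
    dot = sum(a * b for a, b in zip(gesture.direction, to_obj))
    norm = math.hypot(*gesture.direction) * math.hypot(*to_obj)
    if norm == 0.0:
        return math.pi  # degenerate ray or coincident point: treat as no match
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def resolve_referent(
    label: str,
    scene: Sequence[DetectedObject],
    gesture: Optional[PointingGesture] = None,
    max_angle: float = math.radians(15),  # assumed tolerance, not a measured value
) -> Resolution:
    """Pick the object a spoken label refers to, or ask for clarification."""
    candidates = [obj for obj in scene if obj.label == label]
    if not candidates:
        return Resolution(None, f"I can't see a {label}. Can you show me?")
    if len(candidates) == 1:
        return Resolution(candidates[0], None)
    if gesture is not None:
        # Several candidates: use the one closest to the pointing ray.
        best = min(candidates, key=lambda obj: _angle_to(gesture, obj.position))
        if _angle_to(gesture, best.position) <= max_angle:
            return Resolution(best, None)
    return Resolution(
        None, f"I can see {len(candidates)} {label}s. Which one do you mean?"
    )
```

With two cups in view and no gesture, `resolve_referent("cup", scene)` returns a question rather than a target. Passing a `PointingGesture` aimed at one of the cups returns that cup. A real system would also have to handle noisy gesture estimates and two objects that both fall within the tolerance, which this sketch ignores.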

This is a simple but powerful example of achieving shared understanding of the user’s intent, the environment and the spatial constraints of the task at hand. It also highlights the key challenges in building advanced HRI. First, perception quality acts as the foundation. Even the most advanced reasoning and control systems are undermined by a weak perception pipeline, and reliably sensing dynamic humans is a uniquely difficult part of this problem.

But the second, more complex challenge is managing unstructured, unchoreographed interactions. How can a system allow a user to speak and gesture freely, as they would with a human teammate, while still guaranteeing safe, robust and reliable responses every time? Solving this requires an architecture that can make sense of ambiguous, multi-modal human behaviour in real time to determine the best way forward.

Advancing the roadmap to human-robot collaboration

It’s clear that achieving realistic general-purpose collaboration with robots demands robust HRI integration. This means enabling unstructured, free-form interactions that remain safe, reliable and responsive in real-world settings.

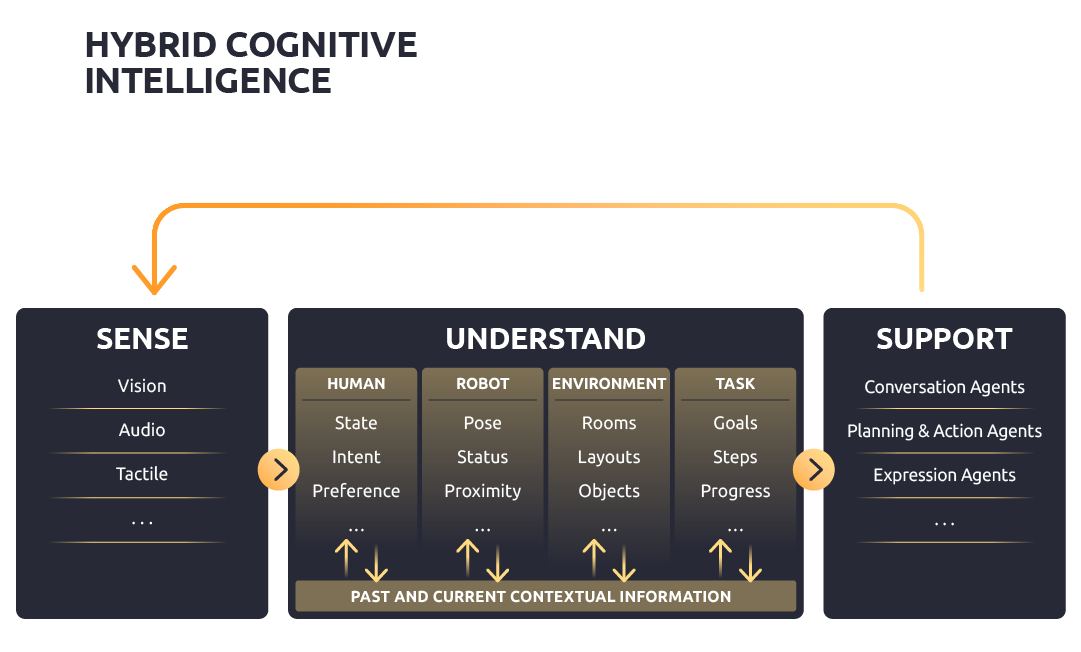

Our solution is a hybrid cognitive intelligence system that forms the backbone of our HRI roadmap, structured around a simple yet powerful framework: sense → understand → support.

Sense: The system continuously gathers multimodal data from its surroundings to perceive the current state of the human user, the robot, the task they’re working on and the environment they are in.

Understand: This is the core of creating a shared mental model between the human and robot. The system builds a deep contextual understanding of four key areas, namely environment, task, intent and capability, for both the human and the robot. It retains the context captured by its models in structured knowledge bases, such as knowledge graphs and ontologies.

Support: A multi-agent system uses this shared understanding to make decisions. Separate agents for manipulation, conversation, planning and locomotion work together, pulling from the contextual understanding to decide how best to control the robot to support the user.

This architecture, which we explore further in our human-machine understanding report with Capgemini, combines powerful agentic AI with advanced modelling tools that help the system understand its environment, the task at hand and the players (both human and robot) involved. It also uses structured knowledge bases to keep track of important context throughout the interaction.

By holding onto this context in a clear and organised way, the system can coordinate its AI models, decision-making agents and sensors more effectively for a clearer picture of the task at hand. This structure makes the system’s reasoning more transparent, robust and reliable, showing us exactly what information it used to make a decision so we can understand why it did what it did.
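To make the sense → understand → support loop more concrete, here is a deliberately small Python sketch. It is an illustration under stated assumptions, not our implementation. The context is held as simple (subject, relation, object) triples standing in for a knowledge graph, only two of the four agents are shown, and all names (`ContextStore`, `Decision`, `manipulation_agent`, `locomotion_agent`, `step`) and relation labels are hypothetical. Each decision carries the facts it relied on, which is one simple way to get the kind of traceability described above.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Set, Tuple

Fact = Tuple[str, str, str]  # (subject, relation, object)


@dataclass
class ContextStore:
    """Toy stand-in for a structured knowledge base."""
    facts: Set[Fact] = field(default_factory=set)

    def add(self, fact: Fact) -> None:
        self.facts.add(fact)

    def query(self, subject: str, relation: str) -> List[Fact]:
        return sorted(
            f for f in self.facts if f[0] == subject and f[1] == relation
        )


@dataclass
class Decision:
    agent: str
    action: str
    evidence: List[Fact]  # the facts this decision was based on


def sense(raw: Iterable[Fact]) -> List[Fact]:
    # Placeholder for perception: real input would be camera and audio data.
    return list(raw)


def understand(percepts: Iterable[Fact], context: ContextStore) -> None:
    # Fold new percepts into the shared context.
    for fact in percepts:
        context.add(fact)


def _wanted_object(context: ContextStore) -> Optional[Tuple[Fact, Fact]]:
    """Return (what the user wants, where it is), if both are known."""
    wants = context.query("user", "wants")
    if not wants:
        return None
    located = context.query(wants[0][2], "located_on")
    if not located:
        return None
    return wants[0], located[0]


def manipulation_agent(context: ContextStore) -> Optional[Decision]:
    found = _wanted_object(context)
    if found is None:
        return None
    want, located = found
    reach = ("robot", "can_reach", located[2])
    if reach in context.facts:
        return Decision("manipulation", f"pick up {want[2]}", [want, located, reach])
    return None


def locomotion_agent(context: ContextStore) -> Optional[Decision]:
    found = _wanted_object(context)
    if found is None:
        return None
    want, located = found
    if ("robot", "can_reach", located[2]) not in context.facts:
        return Decision("locomotion", f"move to {located[2]}", [want, located])
    return None


AGENTS = (manipulation_agent, locomotion_agent)  # fixed priority order


def support(context: ContextStore) -> Optional[Decision]:
    # The first agent with a proposal wins; real arbitration would be richer.
    for agent in AGENTS:
        decision = agent(context)
        if decision is not None:
            return decision
    return None


def step(raw: Iterable[Fact], context: ContextStore) -> Optional[Decision]:
    """One pass of sense -> understand -> support."""
    understand(sense(raw), context)
    return support(context)
```

For example, feeding in `("user", "wants", "cup_1")` and `("cup_1", "located_on", "table")` yields a locomotion decision to move to the table. Once `("robot", "can_reach", "table")` is sensed, the next step yields a manipulation decision whose `evidence` lists all three facts. The sketch never retracts stale facts; a real knowledge base would need to update and expire context as the scene changes.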

Are you ready for a new member of the team?

The leap forward in physical AI must be matched by a leap forward in HRI. Building robots that can coexist, take instruction, cooperate and collaborate, as set out in our roadmap, will redefine how we work across industries, from manufacturing to healthcare.

The question now isn’t whether robots can join our teams, but how ready we are to welcome them. So ask yourself: are you building a tool or a teammate?

Follow along with our development as we continue to explore the future of HRI and physical AI.